August 28, 2018

In this new post, we’re taking a break from modern birding data in our birder’s series… let’s explore gorgeous drawings from a natural history collection! Armed with rOpenSci’s packages binding powerful C++ libraries and open taxonomy data, how much information can we automatically extract from images? Maybe not much, but we’ll at least have explored image manipulation, optical character recognition (OCR), language detection, taxonomic name resolution with rOpenSci’s packages.

Free natural history images and appropriate R tooling!

Free natural history images and appropriate R tooling!

A long time ago I had bookmarked the Flickr account of the Biodiversity Heritage Library (BHL). So many beautiful images of biodiversity, moreover free to use! In particular, I downloaded all pictures from one of the Birds of Australia albums.

I wanted to try to extract the bird names from images using packages of

Jeroen Ooms’, rOpenSci’s post-doc hacker & C(++)-bindings wizard. For

that I worked with magick for

image manipulation, tesseract

for optical character recognition (OCR),

cld2/cld3

for language detection… Quite the armory! We’ll also sprinkle some

taxonomy magic by Scott Chamberlain, one of rOpenSci’s co-founders, to

resolve the names extracted.

OCR bird naming workflow, piece by piece

OCR bird naming workflow, piece by piece

In this section, we explain the different elements of our R workflow: preparing images, extracting text, resolving taxonomic names.

Image preparation

Image preparation

I saved the pictures locally in a “birds” folder. Yes, I click-buttoned instead of using the Flickr API for which e.g. Jim Hester wrote a minimal R wrapper… I don’t do everything with R scripts (yet).

library("magrittr")

filenames <- fs::dir_ls("birds")

Each image shows a bird and its name. Images are either landscape- or

portrait-oriented, but for the sake of simplicity, I’ll act as if they

were all portrait-oriented. A possible easy and lazy fix here would be

to duplicate images rotated (magick::image_rotate) in all possible

directions and then to apply the workflow to all 4 versions of each

image, hoping to get good data from one of the rotated versions.

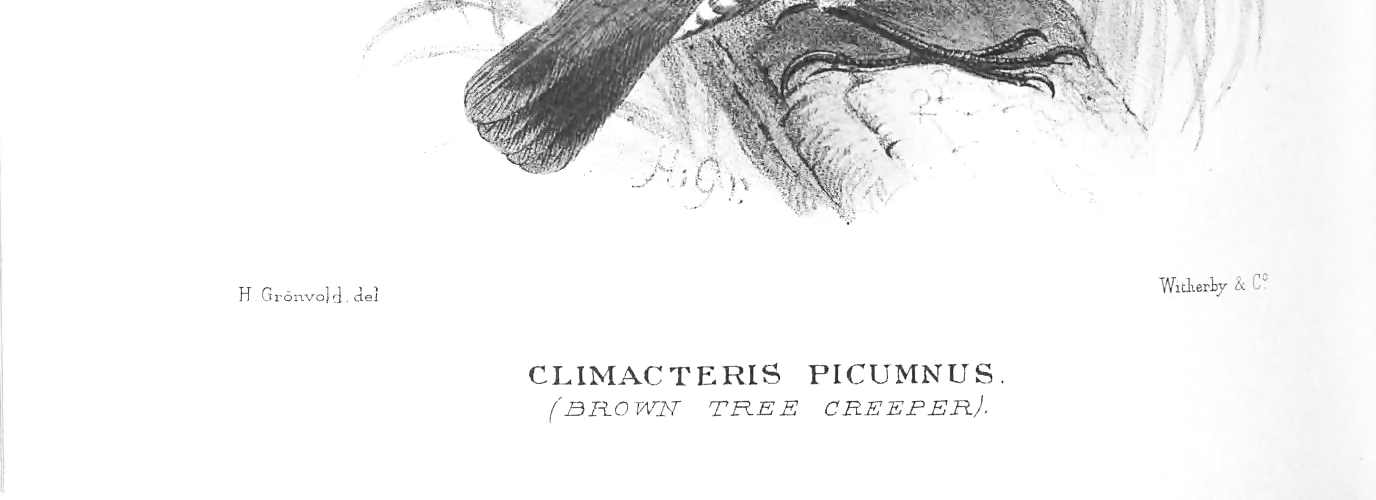

magick::image_read(filenames[1])

From that image, I wanted to extract the name indicated below the bird.

To maximize the efficiency of OCR, I shall first prepare the image,

since the accuracy of OCR depends on the quality of the input

image

which can be influenced a bit. This part could be tweaked even more, and

in real life examples it’ll be worth spending time trying different

magick functions and parameter values. Since I have in mind the case

of a bunch of images to be batch-processed, nothing is done by hand.

crop_bird <- function(filename){

image <- magick::image_read(filename)

height <- magick::image_info(image)$height

# crop the top of the image

image <- magick::image_crop(image,

paste0("+0+",round(0.75*height))) %>%

# convert the image to black and white

magick::image_convert(type = "grayscale") %>%

# increase brightness

magick::image_modulate(brightness = 120) %>%

magick::image_enhance() %>%

magick::image_median() %>%

magick::image_contrast()

# we'll need the filename later

attr(image, "filename") <- filename

return(image)

}

crop_bird(filenames[1])

It does look cleaner now!

Text extraction

Text extraction

Now is the actual OCR step! The tesseract package provides bindings to

the Tesseract OCR

engine, free

software currently sponsored by Google. It is a powerful engine, with a

ton of parameters. Here again, tweaking a lot is warranted. Particularly

useful reads are tesseract

vignette

and this Wiki page of Tesseract about improving the quality of the

output.

The hocr package might be of

interest for post-processing of OCR results.

Below, the only option changed from default is the page segmentation mode choosing 1 for “Automatic page segmentation with Orientation and script detection (OSD)”. When using Latin instead of English training data the results were not as good.

One can use either tesseract::ocr for a file path, url, or raw vector

to image, or magick::image_ocr for a magick object which is quite

handy in our pipeline.

The function below also filters results of the OCR using language

detection. By only keeping text recognized as either Latin or English by

one of the two language detection packages cld2 and cld3 that are

interfaces to Google compact language detectors 2 and 3, one gets a

first quality filter. If not doing that, the output would contain more

unusable text.

get_names <- function(image){

filename <- attr(image, "filename")

ocr_options <- list(tessedit_pageseg_mode = 1)

text <- magick::image_ocr(image, options = ocr_options)

text <- stringr::str_split(text, "\n", simplify = TRUE)

text <- stringr::str_remove_all(text, "[0-9]")

text <- stringr::str_remove_all(text, "[:punct:]")

text <- trimws(text)

text <- stringr::str_remove_all(text, "~")

text <- text[text != ""]

text <- tolower(text)

# remove one letter words

# https://stackoverflow.com/questions/31203843/r-find-and-remove-all-one-to-two-letter-words

text <- stringr::str_remove_all(text, " *\\b[[:alpha:]]{1,2}\\b *")

text <- text[text != ""]

# keep only the words that are recognized as either Latin

# or English by cld2 or cld3

if(length(text) > 0){

results <- tibble::tibble(text = text,

cld2 = cld2::detect_language(text),

cld3 = cld3::detect_language(text),

filename = filename)

results[results $cld2 %in% c("la", "en") |

results$cld3 %in% c("la", "en"),]

}else{

return(NULL)

}

}

(results1 <- filenames[1] %>%

magick::image_read() %>%

get_names())

## NULL

Nothing! Now if we replace magick::image_read with the previously

defined crop_bird function that crops and cleans the image…

(results2 <- filenames[1] %>%

crop_bird() %>%

get_names())

## # A tibble: 2 x 4

## text cld2 cld3 filename

## <chr> <chr> <chr> <chr>

## 1 climacteris picumnus <NA> la birds/n115_w1150_42399797481_o.jpg

## 2 brown tree creeper en <NA> birds/n115_w1150_42399797481_o.jpg

We get a result! So we see that the image transformation was quite useful.

Now, these names look fine, but how to be sure they’re actually taxonomic names?

Taxonomic name resolution

Taxonomic name resolution

The taxize package by Scott

Chamberlain, is a taxonomic toolbelt for R, providing access to many

fantastic data sources and tools for taxonomy. One of them, the Global

Name Resolver, provides, well, resolution of taxonomic names, sadly not

common names. taxize::gnr_resolve has many options, of which only one

is used below: best_match_only = TRUE means it’ll only return the best

match from the different data sources.

latin <- results2$text[results2$cld2 == "la"|

results2$cld3 == "la"]

taxize::gnr_resolve(latin,

best_match_only = TRUE)

## # A tibble: 1 x 5

## user_supplied_name submitted_name matched_name data_source_tit~ score

## * <chr> <chr> <chr> <chr> <dbl>

## 1 climacteris picum~ Climacteris pi~ Climacteris p~ NCBI 0.988

English names could be cleaned up a bit using the spelling

package, also an rOpenSci

package, since spelling::spell_check_text would output possible typos.

OCR bird naming workflow in action!

OCR bird naming workflow in action!

First the two steps image processing and OCR are applied to all images.

bird_names <- purrr::map(filenames, crop_bird) %>%

purrr::map_df(get_names)

Out of 51 images only 17 are present in the final table with possible names which is a bit disheartening, but one could surely do better in the image processing and OCR tweaking steps! Maybe one could frame the parameter search as a machine learning problem. Please also keep in mind that such natural history images are quite hard to parse.

The name resolution information can be added to this table.

safe_resolve <- function(text){

results <- taxize::gnr_resolve(text,

best_match_only = TRUE)

if(nrow(results) == 0){

list(NULL)

}else{

list(results)

}

}

bird_names <- dplyr::group_by(bird_names, text) %>%

dplyr::mutate(gnr = ifelse(cld2 == "la" | cld3 == "la",

safe_resolve(text),

list(NULL)))

We do not get much resolution, but we knew the names weren’t very good

to start with. A better (untested here!) idea here might be to get a

full list of names of Australian birds, potentially leveraging the

taxizedb package by Scott

Chamberlain, and to then

fuzzy-match them with the names we have.

unique(bird_names$gnr)

## [[1]]

## # A tibble: 1 x 5

## user_supplied_name submitted_name matched_name data_source_tit~ score

## * <chr> <chr> <chr> <chr> <dbl>

## 1 climacteris picum~ Climacteris pi~ Climacteris p~ NCBI 0.988

##

## [[2]]

## [1] NA

##

## [[3]]

## NULL

##

## [[4]]

## # A tibble: 1 x 5

## user_supplied_na~ submitted_name matched_name data_source_title score

## * <chr> <chr> <chr> <chr> <dbl>

## 1 austrodicaeum ii~ Austrodicaeum ~ Austrodicaeu~ The Interim Regis~ 0.75

##

## [[5]]

## # A tibble: 1 x 5

## user_supplied_name submitted_name matched_name data_source_tit~ score

## * <chr> <chr> <chr> <chr> <dbl>

## 1 melithreptus laet~ Melithreptus l~ Melithreptus ~ CU*STAR 0.988

##

## [[6]]

## # A tibble: 1 x 5

## user_supplied_na~ submitted_name matched_name data_source_title score

## * <chr> <chr> <chr> <chr> <dbl>

## 1 rad isdlvorniode Rad isdlvorni~ Rad Baker & ~ The Interim Regist~ 0.75

Conclusion

Conclusion

rOpenSci packages supporting this (and your) workflow

rOpenSci packages supporting this (and your) workflow

In this post, we made use of R packages quite useful to wrangle information from diverse formats:

We also used a function from

taxize allowing us to use the

Global Name Resolver. Discover

more packages from our suite here.

Applicability of this OCR bird naming workflow

Applicability of this OCR bird naming workflow

Actually, the BHL itself provides OCR output for its collection, see this example. I wasn’t able to find information about the software powering this OCR. What I was able to find out is that the BHL uses purposeful gaming in its OCR workflow. The raw OCR results aren’t much better than what we got in this post which is comforting.

More data from the Biodiversity Heritage Library

More data from the Biodiversity Heritage Library

If you’re interested in other types of data from the BHL, in addition to

the images, have a look at the rbhl

paclage, part of rOpenSci’s suite,

that interacts with the BHL API. One can e.g. search all books by the

same author as the one we used images from.

author <- rbhl::bhl_authorsearch("Gregory M Mathews")

books <- rbhl::bhl_getauthortitles(creatorid = author$CreatorID)

head(books$FullTitle)

## [1] "A manual of the birds of Australia,"

## [2] "A list of the birds of the Phillipian sub-region : which do not occur in Australia. "

## [3] "A manual of the birds of Australia /"

## [4] "A list of the birds of Australia : containing the names and synonyms connected with each genus, species, and subspecies of birds found in Australia, at present known to the author /"

## [5] "Austral avian record; a scientific journal devoted primarily to the study of the Australian avifauna."

## [6] "Arcana, or, The museum of natural history : containing the most recent discovered objects : embellished with coloured plates, and corresponding descriptions : with extracts relating to animals, and remarks of celebrated travellers; combining a general survey of nature."

Or we could get all books whose title contains the words “birds” and “australia”.

australia_birds <- rbhl::bhl_booksearch(title = "birds Australia")

head(australia_birds$FullTitle)

## [1] "Handbook to the birds of Australia. : [Supplementary material in Charles Darwin's copy]."

## [2] "An introduction to The birds of Australia /"

## [3] "The useful birds of southern Australia : with notes on other birds /"

## [4] "The Birds of Australia"

## [5] "The birds of Australia,"

## [6] "The birds of Australia,"

And to get the OCR results of the pages of the book we used, we could write:

library("magrittr")

# ocr=TRUE to extract OCR for all pages

rbhl::bhl_getitempages("250938", ocr = TRUE) %>%

# for each page transform the type into a string

dplyr::group_by(PageUrl) %>%

dplyr::mutate(page_type = toString(PageTypes[[1]])) %>%

# keep only the illustration pages

# that are like the ones we used

dplyr::filter(page_type == "Illustration") %>%

# from the data.frame extract the OCR

dplyr::pull(OcrText) %>%

head()

## [1] "491 \nFAL CUNCULUS LEUCOGASTER. \n( WHITE -BELLIED £ If BIKE - TIT) \nFALCUNCULUS FRONTATUS. \nSHRIKE - TIT). \n"

## [2] "492 \nA** \nOREOICA GUTTURALIS. \n(CRESTED BELL-BIRD). \n"

## [3] "APHELOCEPHALA LEUCOPSIS \n( WHITE FACE ). \n"

## [4] "* \nAPHELOCEPHALA PE CTORALIS. \n(CHE <3 TNUT -BREASTED WHITEFA CEj. \nAPHELOCEPHALA NIGRICINCTA. \n(BE A CK-BAH.DED WHITE FA CEj. \n"

## [5] "H . Gronvold. del. \nWitherLy & C° \nSPHENOSTOMA CRIS TATUM \n(WEDGE BIEL). \n"

## [6] "49 6 \nH \n(jronvolcl. del. \nN E O SIT TA LE CJ C O CE PHAI.A. \n( WHITE ¦ HE AID EE THE EH UN HE FL). \nNEOSHTA ALBATA \n(F IE E> T Ft E EE UNNEFlj. \nNEOSITTA CHRYSOPTERA \nf OFi. A. NGE - wing-e d tree runner). \nWitWLjA \n"

So there’s quite a lot to explore!

More birding soon!

More birding soon!

Stay tuned for the next post in this series, about getting and using bird taxonomic and trait data in R! In the meantime, happy birding!