June 8, 2018

For the fourth and last day of project recaps from this year’s unconf, here is an overview of the next five projects. (Full set of project recaps: recap 1, recap 2, recap 3, recap 4.)

In the spirit of exploration and experimentation at rOpenSci unconferences, these projects are not necessarily finished products or in scope for rOpenSci packages.

umapr

Summary: umapr wraps the Python implementation of UMAP to make the algorithm accessible from within R, leveraging reticulate to interface with Python. Uniform Manifold Approximation and Projection (UMAP) is a non-linear dimensionality reduction algorithm. It is similar to t-SNE but computationally more efficient.

Team: Angela Li, Ju Kim, Malisa Smith, Sean Hughes, Ted Laderas

code: https://github.com/ropenscilabs/umapr

umapr team picture by Mauro Lepore

greta

Summary: greta is an R package for writing statistical models and fitting them by MCMC. We luckily had the greta creator at the unconf: Nick Golding. The unconf team worked on contributing tutorials/vignettes to greta, including:

- linear mixed model with one random effect : https://github.com/revodavid/greta-examples/blob/master/milk.R

- linear mixed model compared to lm : https://github.com/revodavid/greta-examples/blob/master/mtcars.R

- linear mixed model based on an example from a TensorFlow Probability Jupyter notebook and compared to Edward2 HMC: https://github.com/ropenscilabs/greta/blob/unconf/vignettes/8_schools_example_model.Rmd

- linear mixed model running in parallel sessions using future R package : https://github.com/ropenscilabs/greta/blob/unconf/vignettes/election88.Rmd

In addition, they created a new type of sampler for Random Walk Metropolis Hastings (https://github.com/ropenscilabs/greta/tree/samplers).

Team: Michael Quinn, David Smith, Shirin Glander, Matt Mulvahill, Tiphaine Martin

code: https://github.com/ropenscilabs/greta/tree/unconf#work-during-ropensci-unconference-2018

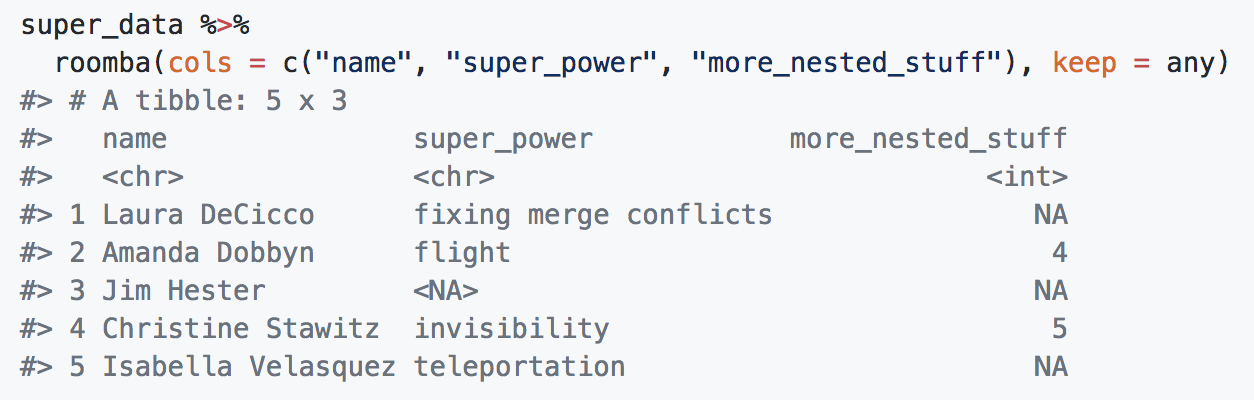

roomba

Summary: roomba is a package to transform large, multi-nested lists into a more user-friendly format (i.e. a tibble) in R. roomba::roomba() searches deeply-nested list for names and returns a tibble with the associated column titles. It handles empty values gracefully by substituting NULL values with NA or user-specified values.

Team: Amanda Dobbyn, Christine Stawitz, Isabella Velasquez, Jim Hester, Laura DeCicco

code: https://github.com/ropenscilabs/roomba

roomba team picture by Mauro Lepore

proxy-bias-vignette

Summary: Paige Bailey worked on a tutorial/vignette designed to assist with spotting and preventing proxy bias. From Paige:

Machine Learning systems often inherit biases against protected classes and historically disparaged groups via their training data (Datta et al. 2017). Though some biases in features are straightforward to detect (ex: age, gender, race), others are not explicit and rely on subtle correlations in machine learning algorithms to understand. The incorporation of unintended bias into predictive models is called proxy discrimination.

In this vignette, Paige implemented an example machine learning model using decision trees, which determines whether its classification for loan recipients is biased against certain groups.

Check out the Jupyter Notebook to get started.

Team: Paige Bailey

code: https://github.com/ropenscilabs/proxy-bias-vignette

http caching

Summary: I didn’t write much code at this unconf, but since Hadley was around, I was inspired to try to integrate httr into vcr/webmockr for HTTP request caching/mocking. I started the integration, but it’s not quite done yet. Check out development in the webmockr adapter-httr branch and examples. See also curl package integration work in webmockr adapter-curl branch

Team: Scott Chamberlain